Event report/blog post by Ilan Goodman of The Winton Centre for Risk and Evidence Communication

In November 2019 I led a session with MJA members exploring ways to report risks from health and medical research. What follows covers some of what I talked about, and some of the points that came up in discussion.

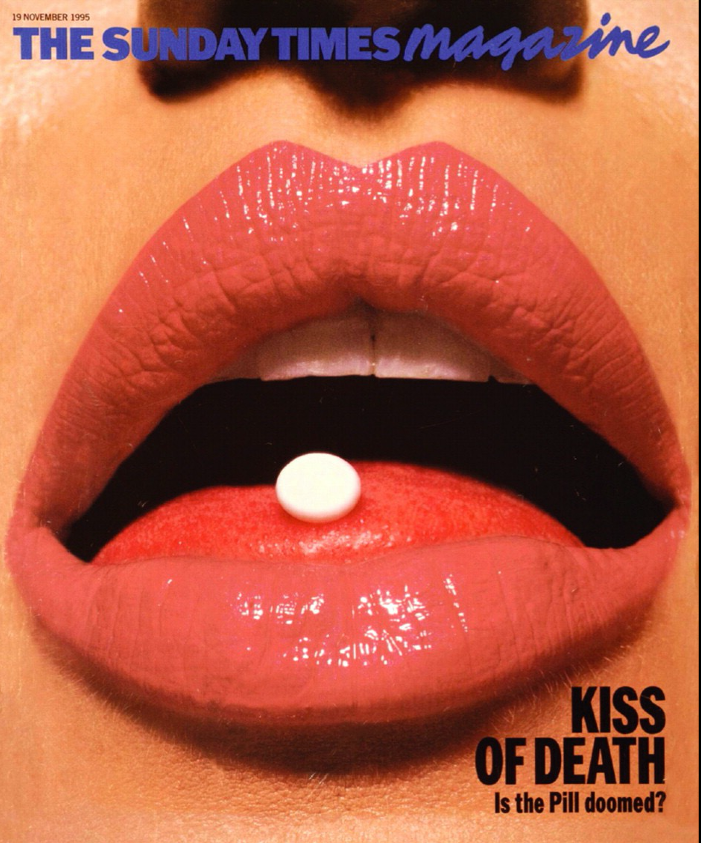

In 1995 there was a major scare over the safety of the contraceptive pill.

The UK Committee on the Safety of Medicines announced new findings that the pill doubled the risk of potentially fatal blood clots. Widespread media coverage prompted panic and thousands of women stopped taking the pill.

In the following year the number of pregnancies and abortions spiked. The ‘pill scare’ of 1995 has been credited with 13,000 additional abortions (Furedi, 1999).

So, was the panic justified?

According to the research behind the committee’s announcement, around 1 in 7000 women not taking the pill were likely to develop blood clots, doubling to 2 in every 7000 women taking the pill. To put those figures another way, around 0.014% of women were likely to develop a blood clot without taking the pill, rising to around 0.029% for those taking it.

The ‘doubling’ of risk reported was wholly accurate, but wildly misleading: for an individual deciding whether to take the pill or not the increased risk was barely worth bothering about.
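The gap between those two ways of stating the same finding is easy to check. Here is a minimal sketch in Python using only the 1-in-7000 and 2-in-7000 figures quoted above; the function name `compare_risks` is my own illustrative choice, not something from the original research.

```python
from fractions import Fraction


def compare_risks(baseline, exposed):
    """Return (relative risk, absolute risk increase) for two probabilities."""
    relative_risk = exposed / baseline       # "how many times higher?"
    absolute_increase = exposed - baseline   # "how many extra cases?"
    return relative_risk, absolute_increase


# Figures quoted above for the 1995 pill scare.
baseline = Fraction(1, 7000)  # women not taking the pill
exposed = Fraction(2, 7000)   # women taking the pill

relative_risk, absolute_increase = compare_risks(baseline, exposed)

print(f"Relative risk: {float(relative_risk):.1f} times the baseline")
print(f"Baseline risk: {float(baseline):.3%}")
print(f"Risk on the pill: {float(exposed):.3%}")
print(f"Extra cases per 7,000 women: {float(absolute_increase * 7000):.0f}")
```

The relative risk is the headline-friendly number; the absolute increase is the one that tells an individual how much their own risk actually changes.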

There are two key take-aways from this shocking bit of recent history.

First, relative risks grossly distort and amplify the perception of risk. A TRIPLING of risk might sound terrifying (especially in Daily Mail style all-caps), but a change from 1 in 10,000 people to 3 in 10,000 people getting cancer / dying / developing heart disease (or whatever) doesn’t.

Second, to make meaningful decisions about healthcare you need absolute risks. Only the absolute risk gives you a grasp of the real magnitude and helps you decide ‘is this worth caring about’? Is it worth acting on? I love this quote from Steve Woloshin and Lisa Schwartz, “Knowing the relative risk without the absolute risk is like having a 50% off coupon for selected items – but not knowing if the coupon applies to a diamond necklace or to a pack of chewing gum.”

What’s so frustrating is that – nearly two and a half decades later – people do know this stuff, but relative risks are still commonly reported without context. It would be easy to blame journalists (it’s always “the media”, right?) but the more I’ve looked into the issue, the more I’ve found that they are not primarily to blame. The problem goes deeper.

Last summer, for example, a BMJ study following over 100,000 people found an association between sugary drink consumption and cancer. ‘Just two glasses of fruit juice a day may raise cancer risk by 50 per cent’ ran the Telegraph’s headline.

This was a striking and reasonably accurate way of reporting the study’s main finding: each 100ml increase in daily sugary drink consumption was associated with an 18% increased risk of overall cancer and a 22% increased risk of breast cancer over 5 years.

These, of course, are relative numbers. They are percentage increases over the baseline risk of getting cancer without the sugary drinks. The Telegraph, the Guardian and many other outlets who covered the story didn’t give any absolute risk figures, and there was a pretty simple reason: there weren’t any in the original paper or the press release.

It was only the MJA’s award-winning James Gallagher, writing for the BBC, who managed it:

“For every 1,000 people in the study, there were 22 cancers. So, if they all drank an extra 100ml a day, it would result in four more cancers – taking the total to 26 per 1,000 per five years, according to the researchers.”

This is so much clearer and more useful! You could use those numbers to decide whether it’s worth giving up your full-fat Coke habit and the 5 sugars in your tea…or not.

So how did James get these figures? I pinged him on Twitter to find out. Turns out he got help. He enlisted medical statistician Dr Graham Wheeler, a cancer specialist and stats ambassador for the RSS who had been sent the press release via the Science Media Centre. Graham calculated the absolute risks himself from numbers reported in the original paper.
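A back-of-the-envelope version of that kind of calculation might look like the sketch below. It is only an illustration, not Graham’s actual method: it assumes the 22 cancers per 1,000 people can be treated as the baseline risk and that the 18% relative increase applies directly to it. The function name `absolute_from_relative` is hypothetical.

```python
def absolute_from_relative(baseline_per_1000, relative_increase):
    """Turn a baseline rate and a relative increase into absolute figures.

    baseline_per_1000: cases per 1,000 people without the extra exposure.
    relative_increase: e.g. 0.18 for an 18% increased risk.
    Returns (cases per 1,000 with the exposure, extra cases per 1,000).
    """
    exposed_per_1000 = baseline_per_1000 * (1 + relative_increase)
    extra_per_1000 = exposed_per_1000 - baseline_per_1000
    return exposed_per_1000, extra_per_1000


# Figures quoted in the BBC piece: 22 cancers per 1,000 people over five
# years, and an 18% relative increase per extra 100ml a day.
exposed_per_1000, extra_per_1000 = absolute_from_relative(22, 0.18)

print(f"Cancers per 1,000 with an extra 100ml a day: {round(exposed_per_1000)}")
print(f"Extra cancers per 1,000: {round(extra_per_1000)}")
```

A real analysis would depend on the study design and on which baseline group the relative risk refers to, which is exactly why a statistician’s help is valuable.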

Much to my chagrin, we can’t all have a medical statistician on hand when turning around a quick article or trying to make sense of a difficult paper (it’d be convenient though, right?). What’s more, James told me that this kind of situation is common: “the issue is deeper than hyped press releases trying to get coverage. I frequently find that even going back to the paper authors doesn’t yield an absolute risk.”

Journal editors and press officers do know the importance of giving absolute risks, especially where research touches on a health issue of public interest. The Lancet press office is well known for doing a good job here. It’s also written into the guidelines of a number of journals including the BMJ’s. So why isn’t it happening consistently?

The impression I get is that it’s often down to inertia and lack of incentive. Providing absolute risks in research write-ups isn’t common practice. Plus they’re likely to make the research sound less exciting, because the relative risks almost always sound more dramatic.

For their part, press officers are often dealing with very limited power and limited time. As one press officer and MJA member told me, she would only ever include numbers directly from the research paper in a press release and wouldn’t feel comfortable pestering the authors for absolute figures if they didn’t also appear in the published study.

In the end, the responsibility falls to each of us in different ways. Journal editors should do a better job insisting authors include absolute risks, especially for research likely to attract public and media interest. It’s often a fairly trivial job for researchers to calculate them (depending on the study design) so there’s no excuse. Press officers should similarly push authors to provide absolute risks – in my view, even if they aren’t included in the published paper. They have a responsibility to the public alongside the professional incentive to generate coverage.

What can journalists do? Apart from needling press officers and editors to include absolute risks, it’s also worth pestering the corresponding authors. If they are unforthcoming, there may be other ways of providing helpful context with other publicly available stats. These are trickier options – they require more work and you’ve got to be careful that you’ve interpreted the original stats correctly.

To give you an example, my boss (the legendary statistician and professor of risk David Spiegelhalter) and I tried to practise what we preach, writing a piece for BBC Science Focus contextualising the widely covered finding that ‘ultra-processed food may increase risk of death by up to 60%’. We used ‘annual risk of death’ stats from the National Life Tables to put this alarming number into perspective.

Absolute risks for different cancers can often be found on Cancer Research UK’s excellent website, and the 10 year risk of having a heart attack or stroke can be estimated with the QRISK 3 tool. These are just examples of sources you can use to contextualise a relative risk where the press release or study doesn’t give the absolute (or baseline) risks. But depending on the topic of a paper it’s not always easy to find the relevant stats.

To try and support journalists and press officers who want to provide absolute risks, my group at the Winton Centre for Risk and Evidence Communication are developing a kind of online calculator called RealRisk. We need people to help us test and improve the site, so if you want to help please get in touch with me (ilan.goodman at maths.cam.ac.uk)!

The best option, of course, is if the study authors calculate the absolute risks pertinent to their own research. So my new year’s resolution is to keep protesting and complaining and writing blog articles (etc.) when they don’t. Join me and eventually they’ll listen!

A quick risk-related tip to finish: follow RelativelyRisky on Twitter. It’s run by Australian blogger and health writer GidMK. Each tweet is just a link to a reported piece of research, followed by the relative risk and the absolute risk – which he calculates himself. Simple, smart, delicious.
